by Scott Highton ©2015

Scott Highton is a pioneering VR photographer and author of the seminal 2010 book Virtual Reality Photography (ISBN 978-0-615-34223-8). He worked for a number of years as a cinematographer and associate producer on more than 60 wildlife and nature documentaries for PBS, NATURE, Audubon, Discovery, and TBS. He served as the original photography consultant to both Apple and IPIX during development of their respective QuickTime VR™ and IPIX™ technologies, and was the first to shoot with both technologies underwater and in other extreme environments.

This article was first published in January, 2015.

In 1994, Apple Computer introduced QuickTime™ VR (virtual reality), a technology that brought virtual environments to the computer screens of the masses. The ability to explore 360° photographic views interactively was no longer limited to high-end simulators or those with access to supercomputers and 3D projection systems. The QuickTime VR on-screen viewing windows were necessarily small (due to limited computing power), and the viewing experience was as much a novelty as it was useful. But with time came greater processing power at lower costs, delivery of vastly improved image quality, distribution via the web (rather than CDs), and increasing bandwidth for high speed connectivity.

As “consumer” level VR technologies improved, we moved from small movie windows showing a 360° cylindrical view (with limited vertical coverage) at fairly low resolutions, to complete 360°x180° spherical views with resolutions allowing almost unlimited zoom capabilities (gigapixel panoramas).

Today, it is possible to both photograph and deliver to the web-viewing public panoramic VR imagery that allows viewers to see almost any view they want of a city, national park, vehicle, or home for sale. Viewers can look left and right, up and down, zoom in or out, and change their viewing perspective simply by clicking or dragging their mouse... or using their tablet or smart phone screen... or simply moving their head while wearing any of a variety of new consumer VR goggles on the market.

Until recently, the vast majority of this panoramic VR content consisted of static imagery, created mostly by stitching a series of overlapping photographs into a 360°x180° spherical image, which was then projected within an interactive virtual sphere. The resulting viewer experience is one of looking at a still photograph, albeit one with no viewing boundaries and (sometimes) immense depth. For the most part, the scene and subjects being viewed don’t move. It is simply the view itself that changes as the end-user navigates within the images.

A holy grail of VR photography has been to deliver full motion video experiences within these panoramic views so that viewers can feel like they are not only sitting in the cockpit of a race car, but can witness the actual speed of the car in motion. While good still photographs can capture a peak moment of action, it’s an entirely different experience to view that same action in a video or motion sequence. Adding a full 360°x180° motion element to VR capture and display is, by any sense of the term, a game changer.

Today, it is possible to capture such 360° video with a variety of photographic equipment and techniques, ranging from arrays of low-cost GoPro video cameras to expensive custom rigs incorporating multiple high-resolution video cameras, high frame rates, and even 3D.

It’s easy to get caught up in the potential of a new technology, particularly during its infancy. In the case of VR video, or what many are now calling “cinematic VR,” we need to prioritize the end-user experience before we spend countless hours and money pursuing the latest technology, simply because it’s, well... the latest technology.

Good photography involves good visual storytelling. We must not forget that.

Any photographer who’s moved from still photography into video or motion work quickly finds that leading viewers effectively through a story is far more complex when adding that fourth dimension of time (motion) to the mix. You can’t just start shooting at 30 frames per second. One has to think about sequencing, framing, perspectives, action and reaction shots, sound, music, narration, continuity, timing, editing, and a plethora of other factors beyond those usually utilized by the still photographer. Assembling everything together to tell a story effectively is the Gordian knot of the film/video producer.

Failing to do this is like a writer with a great story to tell... but who’s unwilling to effectively sequence his or her words so that the story can be understood. If you put 500 words randomly on a page with the expectation that readers will somehow find your story within them, your effort is relatively pointless. Few of your readers will ever bother to read the jumble you’ve thrown at them.

For photographers (and other visual storytellers), it’s not enough to simply create a beautiful image. Good photographers must have a perspective, an emotion, a message – i.e. a story that they want to impress upon their viewers. This can be done with a single photograph, a series of images, or a motion sequence. Good visual work is more than just documentation of a subject – and more than just “pretty” pictures.

This was a huge conceptual challenge for photographers in the early days of panoramic VR, and why so many good photographers struggled to produce more than mediocre VR imagery. A common perception was that all you had to do was position your camera in the middle of a scene and viewers would be able to find your intended story simply by looking around the image interactively. Even worse were photographers who didn’t really know what they were trying to show in a panoramic scene, but just hoped that by capturing everything, their viewers would find something to grab their interest.

These approaches require that viewers work too hard. If the photographer can’t present his or her story clearly or effectively through choice of perspective, lighting, timing, framing, etc., viewers quickly lose interest. VR images become relatively pointless.

Unfortunately, the novelty of navigating around 360° photographs wore off quickly for the general public. Photographers, interactive media producers, and clients often struggled to find justification for the significant time and effort (not to mention expense) involved in creating and distributing interactive VR content. When a story could just as effectively be told using two or three good still photographs, a short video clip, or even a few paragraphs of text, what was the point of producing and pushing VR content to viewers? Unless VR adds value, there’s little reason to produce it, particularly after the shine of new technology has worn off.

We face a similar challenge today, now that cinematic VR is within our collective hands. We must look at what audiences have come to expect from film and video (motion) storytelling in order to better determine what we, as producers of this new content, need to incorporate into it. We need to be good photographers. But we need to be good filmmakers, as well.

In traditional filmmaking and video production, the filmmaker necessarily leads his or her audience step-by-step through the story. This is done by sequencing clips, varying perspectives and composition, use of action (and non-action), direction, camera and subject movements, transitions, sound, editing, etc. If you simply throw a series of video clips onto the screen, you will lose your audience quickly – much like the writer who randomly scatters hundreds of very good words on a page and expects his or her readers to find the potentially great story within them.

Watching a movie or TV program has always been a passive activity. Audiences expect the story to unfold for them on a screen. As producers, we need to be willing to guide them through our stories, using both new and traditional filmmaking techniques, and keep them on our story paths. We need to prevent them from missing important moments because they are distracted or “looking the other way” in our VR environments when these moments occur.

We also need to be aware of the challenges viewers face when we present our stories in new media formats, such as VR headsets (Oculus Rift, Samsung Gear VR, etc.), mobile screens, tablets, and smart phones. Our stories also need to work on computer screens, home televisions, and theater screens, including IMAX and fulldome experiences.

Keep in mind that Disney introduced their Circarama 360° film system way back in 1955. That’s 60 years ago. The original system used eleven cameras and eleven screens, and the later Circle-Vision version used nine of each.

IMAX motion picture technology was patented in 1970, and has seen increasing use in the decades since, including popular recent feature films. We have many decades of immersive film production experience to draw from in the cinematic VR field. There is no reason why we should feel we have to reinvent the wheel as we move forward with creating content for it in the years ahead.

Consideration #1: Viewing Formats

Not all user experiences will be the same. There’s a significant difference between sitting in a theater with 200 other viewers, and experiencing the same story privately on a personal VR headset. Watching a VR film on your living room or computer screen, or on a smart phone / tablet, provides yet another type of experience. With gyro controls, tablets and phones can be moved in a viewer’s hands to alter the viewing directions in a VR scene. A finger pinch can change the zoom level. A screen tap can link to other VR scenes. Some of these systems (certain theaters, flat screen TVs, and VR goggles) even have 3D projection capability.

Can we create interactive VR video programs that work equally well on all of these systems? Will this require some sort of industry standard for VR video content? Perhaps so. A standardized format is definitely missing today, although IMERSA is working on developing one for fulldome theaters (http://imersa.org). Discussion needs to occur on how to make a standard that can play in both monocular and binocular (3D) modes, establish a minimum resolution, provide a high enough frame rate to mitigate viewer nausea, include directional sound, and of course, provide user control of viewing direction, zoom, pauses, playback speeds/directions, etc.

A story presented on a public screen will not feasibly offer each member of an audience personal control of interactivity. On a private screen, however, personal control of viewing directions, zooms, starting/stopping, etc. will be important.

As VR authors and storytellers, we’ll want our content to work effectively on any of these delivery media. This will require a proven approach to the process of telling our stories. Will it even be possible to do this without having to create separate versions for each presentation format? Remember the hassles of having to create QuickTime, Flash, and HTML5 versions of our VR content so that web-based movies would work on both PCs and Macs? Imagine the further complexity of delivering cinematic VR if we have to provide different formats for each screen type our viewers might be using.

Consideration #2: How Much Field of View is Really Needed?

With traditional VR photography, complete 360°x180° views are generally the most desirable, because the viewer can take as much time as they want to navigate and explore these scenes in detail. Nothing really changes except the direction the viewer is looking, whether they zoom in or out, or click on a link to move to another view. These VR images are, for the most part, static – just like a photograph or painting on the wall. This invites the viewer to take his or her time looking at details, and to move on when satisfied.

Motion imagery is entirely different. Video introduces the element of time to the presentation. Timing and sequencing are necessarily dictated by the storyteller. If viewers are given too much authority to alter how they experience the story, they’re likely to miss important elements of the story line. An example would be a viewer looking in the wrong direction at the wrong time.

Opinions differ on how to best address these issues.

One perspective is that cinematic VR storytellers need to be able to limit how far away from their intended framing viewers can look (and perhaps how long viewers can be allowed to look away). This is considered “limiting the viewer.”
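
As a rough illustration of what “limiting the viewer” could mean in an authoring tool, the following Python sketch clamps the viewer’s requested pan (yaw) angle to a maximum offset from the storyteller’s intended framing. The function names and the 60° limit used in the example are hypothetical, chosen only for illustration, and do not come from any existing VR player.

```python
def wrap_degrees(angle):
    """Wrap any angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def limit_view(requested_yaw, intended_yaw, max_offset):
    """Clamp the viewer's yaw to within max_offset degrees of the intended framing.

    All angles are in degrees. The offset is measured along the shortest
    path around the circle, so the limit also works across the +/-180 seam.
    """
    offset = wrap_degrees(requested_yaw - intended_yaw)
    offset = max(-max_offset, min(max_offset, offset))
    return wrap_degrees(intended_yaw + offset)


# Hypothetical example: the story is framed straight ahead (0 degrees) and
# the viewer may stray no more than 60 degrees to either side.
print(limit_view(30.0, 0.0, 60.0))   # inside the limit, so left unchanged
print(limit_view(120.0, 0.0, 60.0))  # beyond the limit, so held at the edge
```

A time limit on how long viewers may look away could be layered on top of this by shrinking `max_offset` as the look-away time grows.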

Cinematic VR pioneers Greg Downing and Eric Hanson are partners in Santa Monica-based xRez (http://www.xrez.com).

Downing maintains that “cueing the audience” might be a better approach than limiting the viewer, as this leaves the viewer with more options and fewer limits on their potential interactivity. “Cueing” can be done with lighting & audio cues or movement of subjects in the scene that draw the viewers’ attention to where the action is (or will be) taking place. Cueing, however, requires the viewer to be an active participant in the storytelling effort, which is a radical change from the passive viewing that audiences have come to expect over the last century. Many viewers won’t be able to maintain active viewing beyond an initial viewing period.

Consider too that when using personal VR goggles, viewers are generally limited physically in how far they can turn their heads. (Viewers generally need to be in a seated position for extended use of goggles in order to avoid disorientation, nausea, and loss of balance. This holds true for theatrical experiences, as well.) There is little need for a complete spherical 360°x180° view. Whether presented in fulldome or IMAX theaters, computer screens, or VR goggles, an ultrawide – but still limited – field of view is all that’s really necessary.

Human vision provides a field of view of approximately 180° laterally, but we only register detail and critical focus in the center 10 degrees of what we’re looking at. Everything outside of 30° is considered peripheral vision. Our peripheral vision is how we collect most of our sense of visual motion.

Traditional movie screens provide a lateral field of view between 40° and 55°, depending on the viewer’s seating position. IMAX theaters offer a far more immersive experience by providing fields of view between 60° and 120° laterally, and 40° to 80° vertically (again depending on where the viewer is sitting). OMNIMAX experiences offer a 180° lateral field of view on average, and about 125° vertically (about 100° above the horizon and 25° below it). The newer format defined for fulldome is typically 180°x180°, according to the Giant Screen Cinema Association, but some installations, such as SAT in Montreal, extend to 210°, according to Downing.

https://www.youtube.com/watch?v=vXsRpy7yHfs

http://www.whiteoakinstitute.org/TheatreDes.pdf

http://www.giantscreencinema.com
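
The dependence of field of view on seating position follows from simple geometry. The Python sketch below assumes a flat screen viewed from a centered seat, which is a simplification (IMAX and dome screens are curved). The screen width and seating distances in the example are hypothetical numbers, not measurements from any real theater.

```python
import math


def lateral_fov_degrees(screen_width, viewing_distance):
    """Lateral field of view, in degrees, subtended by a flat screen.

    Assumes the viewer is centered in front of the screen. Both arguments
    must use the same unit (for example, meters).
    """
    return math.degrees(2.0 * math.atan(screen_width / (2.0 * viewing_distance)))


# Hypothetical theater: a 12 m wide screen seen from a back-row seat
# (15 m away) and from a seat closer to the front (11.5 m away).
for distance in (15.0, 11.5):
    fov = lateral_fov_degrees(12.0, distance)
    print(f"{distance} m from the screen: {fov:.1f} degrees")
```

For these assumed numbers the results work out to roughly 44° and 55°, which is in line with the range quoted above for traditional movie screens.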

If we accept that these existing theater technologies already provide deeply immersive viewer experiences, providing full 360° spherical coverage for many cinematic VR storytelling experiences is probably not necessary. In fact, making too much coverage available can invite excess distraction for our viewers. (Downing feels that “completely spherical storytelling does have a place” though.)

If we don’t need to capture full 360° spherical views, we open up countless possibilities for improved filming technique and equipment. We gain hidden or “behind the camera” areas for support, grip, sound, and lighting equipment, as well as crew placement. Cranes, dollies, Steadicams, aircraft, and other camera mounts can be readily employed, just as they are for traditional motion picture, video, IMAX, and fulldome work.

Hanson likewise disagrees with the idea that full 360° coverage is unnecessary. “At xRez, we are exploring what works, and I think full immersion is central to the VR experience,” he said. “Many find it objectionable when movies restrict that.”

Downing said that Oculus suggests an office swivel chair is actually the best way to experience VR. A swivel chair allows safer viewing of a full spherical view. When you can no longer turn your head any further, you simply swivel in your chair to gain additional pan range – through 360°. This requires that the viewer have significant personal space around them, however.

Downing added that even though Oculus recommends the “office chair” environment for viewers, you often see people standing, as well.

Consideration #3: How Much User Control?

In a traditional action sequence, camera orientation and composition might change quickly. The action needs to be followed by the camera, with point of view (POV) and reaction shots intermixed into the primary camera sequences. Even with scene establishment or beauty sequences, timing, composition, viewpoint, direction, and perspective are all necessarily dictated by the storyteller. If an interactive viewer is allowed more than a minimal influence over any of these, the resulting story will be quite different – and very likely not as effective as the one the filmmaker’s own talents would provide.

Current cinematic VR systems mostly relinquish this control to viewers, allowing them to look in any direction at any time. Some will argue that this is what makes the video experience truly interactive. But this puts too much responsibility (and work) on the viewer to forecast the story ahead of its telling, so he or she knows where to look, and when. Otherwise, the story can unfold outside their view, and they can miss important parts of it.

What would mitigate this are VR video interfaces designed to automatically draw viewers back to where the story is occurring at critical moments, and even to keep them from straying too far in the first place.

Consider the idea of story key frames, where the storyteller or producer could identify frames (perhaps hundreds of them) along the timeline of the program where the viewer is forced to look in a particular direction and at a particular zoom level in order to be assured of seeing the next action in the story line. No matter where users might stray in their viewing exploration between key frames, their views will be smoothly returned to where they need to be at critical times dictated by the storyteller.

For example, if action occurring in a scene needs to be followed by the viewer, such as someone walking across the room or a car driving by, the storyteller would be able to control the viewer’s framing and panning to follow this action. This control could be released, or relaxed to varying degrees, at other times when viewer exploration would be less of a distraction to the story line.

If the story takes the viewer to the edge of a high cliff, for example, it might be important to make sure that the viewer looks over that edge and into the abyss below, much as a cinematographer would do for a traditional (non-VR) sequence. In a cinematic VR format, the storyteller would control the initial framing so viewers are moved to the edge and forced to look over, but then the direction and framing control would be released so the viewer could look elsewhere and zoom in or out. The viewer could thus increase or decrease the sense of vertigo they are feeling – depending on which they might prefer.

All of this can be done by adding programming capabilities to cinematic VR authoring applications. Audiences would still have the same controls that they currently have – using their mouse or touch screens, turning their heads, etc., in order to navigate. They would simply be guided (and limited), as necessary, by the author’s programming through the movie’s progression.
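
To make the story key frame idea more concrete, here is a minimal Python sketch of how a player might ease the viewer’s direction and zoom back to the storyteller’s framing as each key frame approaches. Everything in it is an assumption made for illustration: the `StoryKeyFrame` fields, the `lead_in` easing window, and the smoothstep blend are hypothetical and are not features of any existing authoring application.

```python
from dataclasses import dataclass


@dataclass
class StoryKeyFrame:
    time: float     # seconds into the program when the framing must be reached
    yaw: float      # required viewing direction, in degrees
    zoom: float     # required zoom level (1.0 = default)
    lead_in: float  # seconds before `time` over which the view is eased back


def wrap_degrees(angle):
    """Wrap any angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def guided_view(user_yaw, user_zoom, t, keyframes):
    """Return the (yaw, zoom) to display at time t, in seconds.

    Outside every key frame's lead-in window, the viewer's own choice is
    used. Inside a window, the view is blended toward the key frame so
    that it arrives exactly on the required framing at the key frame time.
    """
    for kf in keyframes:
        start = kf.time - kf.lead_in
        if start <= t <= kf.time:
            weight = 1.0 if kf.lead_in == 0 else (t - start) / kf.lead_in
            # Smoothstep easing, so the pull starts and ends gently.
            weight = weight * weight * (3.0 - 2.0 * weight)
            # Turn along the shortest path around the circle.
            turn = wrap_degrees(kf.yaw - user_yaw)
            yaw = wrap_degrees(user_yaw + weight * turn)
            zoom = user_zoom + weight * (kf.zoom - user_zoom)
            return yaw, zoom
    return wrap_degrees(user_yaw), user_zoom


# Hypothetical story: at 10 seconds the viewer must be looking toward
# 90 degrees at default zoom, and the pull begins 2 seconds earlier.
story = [StoryKeyFrame(time=10.0, yaw=90.0, zoom=1.0, lead_in=2.0)]
for t in (5.0, 9.0, 10.0):
    print(t, guided_view(0.0, 2.0, t, story))
```

In this sketch the viewer keeps full control between key frames and loses it gradually during each lead-in window. Relaxing control “to varying degrees,” as described above, could be modeled by capping `weight` below 1.0 for less critical moments.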

Consideration #4: How Much Interactivity?

Motion picture and video production is complicated enough as it is. So too is immersive VR and multimedia production. Complexity increases geometrically when the two are combined.

Back in 1995, when photographic VR was still in its infancy, I collaborated with a wonderful creative team at RDC Interactive in Palo Alto, CA to produce one of the original commercial VR projects – the Masco Virtual Showhome. Masco was, at the time, the parent company of popular brands like Baldwin, Delta, Drexel Heritage, and Thermador. This project was a mechanism to provide an interactive catalog of Masco’s home products.

Our panoramic photography for this project involved three days of shooting in a custom-built showhome, featuring installations of key Masco products. Additional photographers shot object VR image sequences of products in the studio. The end result was an interactive title that allowed viewers to walk through a luxury home (with over 60 linked VR panorama nodes), looking in any direction and zooming in or out at will. When a Masco product appeared in a scene, viewers could click on an overlaid hot spot to see both written descriptions of the product, as well as still photos and interactive object movies. Viewers could “virtually” pick up and move objects around in order to see them from any angle. Products were also indexed for category and text based searches.

Programming for this interactive CD was relatively complex, particularly with the nascent tools available at that time. The volume of content that had to be properly interconnected was huge compared to what was standard for traditional marketing and promotion materials.

Terry Beaubois, the founder of RDC Interactive, first worked with virtual reality systems at NASA starting in 1980. He currently teaches in the Architecture Design Program at Stanford University. He reminisced about the leading edge nature of the Masco project.

“The Masco Showhome CD was an excellent guided experience,” he said. “It was shown at the National Association of Home Builders (NAHB) trade show in Houston that year, and free copies were given out to all attendees to take home.”

“Audiences were enthralled by it when it was demonstrated for them on large screens on the show floor,” Beaubois said. “However, I suspect that few people looked at it much after they got home.”

One factor in this was the age and relative technology literacy of audience members at the time. Interactive VR was a fairly new concept for consumers. “With VR, the rate of public acceptance of the technology and the industry’s adoption of it have often been slower than we wished or expected,” Beaubois said.

After reviewing audience response to the Masco Virtual Showhome, the impression left was that the depth of interactivity offered was highly desirable to audiences, but viewers benefitted more from having someone actually guide them through it all. Part of this is attributable to the demands of active viewing and the continuous efforts required of audiences to keep up with it.

Computer games over the last two decades have led the way in providing deep layers of interactivity, including seemingly endless story line options. Incorporating full motion CGI animation, they necessarily include regular “pause points” where players can slow down, look around, and not be in danger of missing something important in the continuing narrative of the game. Such pause points are critical to successful cinematic VR projects, as well.

Even in gaming, if producers provide too many options or too many choices for viewers, they risk losing their audiences within the story. Think of the discouraging experiences gamers often have in proceeding through difficult levels of their games, sometimes stalling completely and walking away, discouraged by the experience.

“Returning to the narrative is important,” said Beaubois – particularly as we venture into cinematic VR. “It’s not the technology that’s important,” he said, “it’s the narrative – or the story line – that’s critical.”

Consideration #5: Filming Technique

Many decades of motion picture and video production have taught us significant lessons about how to shoot good footage, particularly for display on large screens. Interactive screens, which are generally viewed at close distances or projected for ultra-wide (viewer) fields of view, require particular attention to these techniques.

A) Shaky cameras and hand-held footage are, for the most part, unusable. Immersive video needs to be rock solid. Otherwise, viewers quickly become nauseated watching it. With wildly moving cameras, visual cues sent to the brain from our eyes do not match the balance and movement signals coming from our inner ear (or the seats of our pants). The result is motion sickness (the body’s biological reaction for expelling poisons, which cause similar conflicts in the brain’s reception of sensory signals). Visual cues are magnified the closer a viewing screen is to our eyes, or the more of our field of view the movement occupies.

Certain camera movements are fine, as long as they are controlled, silky smooth, and reasonably slow. If you make your viewers feel sick, they will walk away from your story. They will only remember that watching your work was nauseating... not that your story was any good. Take advantage of your “behind the camera” spaces to utilize solid camera supports, stabilizing systems, etc., particularly if you can accept the idea that full 360° spherical coverage is not a necessity for cinematic VR.

An alternative (or addition) to this would be the inclusion of stabilization algorithms in cinematic VR post production software, similar to those available in consumer video applications like Apple’s iMovie, Final Cut Pro, and Adobe’s Premiere. Applying the same after-the-fact stabilizing methods to spherical video post production would give VR filmmakers the ability to eliminate, or at least mitigate, unsteady camera problems.

xRez’s Eric Hanson offered an alternate perspective on this, however. He said, “Immersive camera work is safest when stationary, but we are also exploring the practical limitations of movement. I think gaming in VR will certainly show active cameras to excess! There can be a portion of your audience that wants a roller coaster like experience and are not as sensitive to motion sickness.”

B) The quality of your imagery must be high – far better than you might get away with for traditional web or even broadcast video. Jello effects from rolling shutters on CMOS sensors are deadly. Resolution must be sufficient to make viewing pleasant, rather than a strain, and to provide necessary detail for zooming. Lighting and exposure need to be done well. Higher display frame rates (48 fps) have proven to be far more comfortable for audiences of immersive formats than traditional frame rates for film (24 fps) or video (30 fps).

C) Camera alignment (for multiple cameras) and stitching software must be top quality. It is incredibly distracting for viewers to pan around a video scene and see glaring stitch errors, ghost images, misalignments, or areas of inferior focus. It’s far harder to get this right when shooting with multi-camera rigs, because aligning the entrance pupils (no-parallax points) of multiple lenses in the same spot is virtually impossible.

This becomes even more of a challenge when shooting with 3D multi-camera rigs, as each shooting direction requires a pair of lenses, rather than just one. Video stitching software has to be able to compensate for these errors. Having sufficient overlap (and synchronization) between cameras is essential. The visual experience for viewers needs to be seamless in order to be convincing. Anything less is just another distraction.

D) Exposure, white balance, and focus need to be consistent between cameras in multi-camera rigs. Differences in these call attention to seam areas, and again become a distraction for viewers. Moving imagery generally makes these errors far more noticeable than with still images.

E) Sound quality must be top notch, and for best results, directional. This means that the sound is both recorded and played back with two or more channels (i.e. stereo / binaural or multiple binaural), and properly synchronized with the picture. VR video authoring applications must provide the facility to synchronize the directions from which sounds are heard to the directions that the viewer is looking.

In other words, if you’re looking forward at a babbling brook, the water should sound like it’s originating from a point in front of you. As you turn toward your right, the water will visually reorient toward your left, so correspondingly, the sounds of the brook should also shift toward your left.
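
The babbling brook example can be sketched in a few lines of Python. This is only an illustration using simple two-channel, constant-power panning. Real binaural playback is considerably more involved, and this simplified model cannot distinguish sounds in front of the viewer from sounds behind. The function names and the angle convention (positive angles to the viewer’s right) are assumptions made for the example.

```python
import math


def wrap_degrees(angle):
    """Wrap any angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def stereo_gains(source_bearing, viewer_yaw):
    """Return (left_gain, right_gain) for a sound source in the scene.

    source_bearing is the direction of the sound in the scene and
    viewer_yaw is the direction the viewer is looking, both in degrees,
    with positive angles to the right.
    """
    # Direction of the sound relative to where the viewer is looking.
    relative = wrap_degrees(source_bearing - viewer_yaw)
    # Pan position: -1.0 is fully left, 0.0 is centered, +1.0 is fully right.
    pan = math.sin(math.radians(relative))
    # Constant-power pan law keeps the overall loudness steady.
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


# The brook sits straight ahead in the scene (bearing 0 degrees).
print(stereo_gains(0.0, 0.0))   # viewer faces the brook: equal in both ears
print(stereo_gains(0.0, 90.0))  # viewer has turned right: brook is now at left
```

As the viewer’s yaw changes, the gains shift continuously, so the sound appears to stay attached to the brook in the scene rather than to the viewer’s head.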

Good sound is one of the most overlooked aspects of film and video production, but experienced filmmakers know that great sound can be one of the best tools to cover up less-than-perfect camera work. At the same time, bad sound work can ruin even the best photography. Wind noise on a microphone, line static, bad mixing, inconsistent audio levels, and synchronization errors between audio and picture can completely ruin viewer experiences.

Cinematic VR producers today should regularly refer back to the basics of good filmmaking. The film and video industry has been around for more than a century. During that time, we’ve collectively learned volumes about what works and what doesn’t. We also have a half-century of knowledge available from large screen immersive filmmaking – from the early Disney days, to IMAX and modern fulldome production. This is the foundation of what we’re doing when we capture and present VR video.

|

||

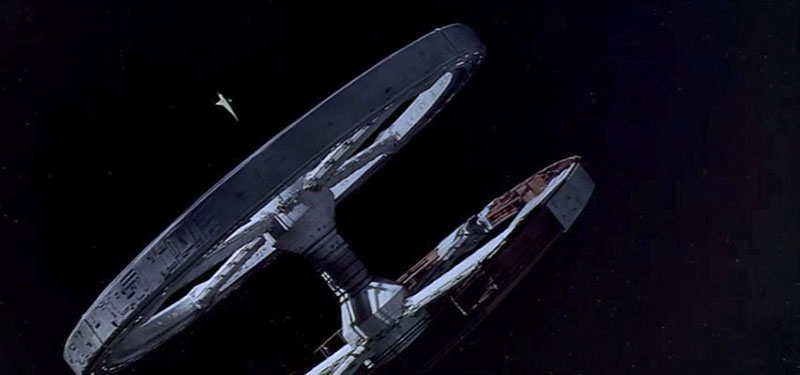

2001: A Space Odyssey |

(Click to play) |

©1968 MGM Studios |

It’s a good idea to go back and watch some timeless film classics, many of which were done for early immersive screens. Their filmmaking techniques still hold up well today. Consider Stanley Kubrick’s 2001: A Space Odyssey, or David Lean’s Lawrence of Arabia, or Patton, all produced in the 1960s. You’ll see little, if any, zooming. They were shot in ultra-wide formats with rock solid cameras (even on dollies, tracks, cranes, etc.), including long duration shots that established mood/location and allowed time for the viewers’ eyes to explore the wide scenes.

Lawrence of Arabia (©1962 Columbia Pictures)

They utilized effective lighting, great directional sound, incredible editing, and exquisite visual storytelling technique. The wide-screen formats allowed audiences to be fully immersed in the visual feast before them, but did not put so many options or distractions in viewers’ paths that they would lose track of the story line.

Patton (©1969 20th Century Fox)

These movies still hold up well today because, while they utilized new technologies for maximizing the immersive viewer experience, they were still great motion pictures... told by great storytellers.

As we move into cinematic virtual reality, we should follow this track. We should be asking ourselves, “Will the VR cinema we're creating today be good enough to be similarly respected 50 years from now?” If not, consider what we should improve in our production techniques to make it so.
